Sensitivity to Unobserved Confounding in Studies with Factor-structured Outcomes

9/28/23

Slides and Paper

Slides: afranks.com/talks

Sensitivity to Unobserved Confounding in Studies with Factor-structured Outcomes (JASA, 2023): https://arxiv.org/abs/2208.06552

Joint work with Jiajing Zheng (formerly UCSB), Jiaxi Wu (UCSB) and Alex D’Amour (Google)

Causal Inference From Observational Data

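Consider a treatment $T$ and an outcome $Y$

Interested in the population average treatment effect (PATE) of $T$ on $Y$: $\text{PATE}_{t_1, t_2} = E[Y \mid do(T = t_1)] - E[Y \mid do(T = t_2)]$

- In general, the PATE is not the same as the observed-data contrast $E[Y \mid T = t_1] - E[Y \mid T = t_2]$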
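A small simulation makes the gap concrete. This is a minimal sketch assuming a hypothetical linear data-generating process with a binary treatment and a single unobserved confounder `u` (all names and coefficients are illustrative, not from the talk): the observed-data contrast lands far from the true effect of 1.0, while a regression that also adjusts for `u`, which is only possible in simulation, recovers it.

```python
import numpy as np

def simulate(n=200_000, tau=1.0, seed=0):
    """Hypothetical DGP: u confounds a binary treatment t and an outcome y."""
    rng = np.random.default_rng(seed)
    u = rng.normal(size=n)                      # unobserved confounder
    p = 1.0 / (1.0 + np.exp(-1.5 * u))          # treatment more likely when u is high
    t = rng.binomial(1, p)
    y = tau * t + 2.0 * u + rng.normal(size=n)  # true PATE equals tau
    return u, t, y

def naive_contrast(t, y):
    """Observed-data contrast E[Y | T = 1] - E[Y | T = 0]."""
    return y[t == 1].mean() - y[t == 0].mean()

def adjusted_effect(u, t, y):
    """OLS coefficient on t when u is included as a regressor."""
    design = np.column_stack([np.ones_like(u), t, u])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef[1]

u, t, y = simulate()
print(f"naive contrast: {naive_contrast(t, y):.3f}")      # biased upward by u
print(f"adjusted for u: {adjusted_effect(u, t, y):.3f}")  # close to the true PATE of 1.0
```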

Confounders

Confounding bias

- Observed-data regression of $Y$ on $T$ and $X$ is biased for the PATE when unobserved confounders $U$ affect both $T$ and $Y$

- We try to condition on as many observed confounders as possible to mitigate potential confounding bias

- It is commonly assumed that there are “no unobserved confounders” (NUC), but this assumption is unverifiable

- Sensitivity analysis is a tool for assessing the impacts of violations of this assumption

A Motivating Example

The Effects of Light Alcohol Consumption

Observational data from the National Health and Nutrition Examination Survey (NHANES) on alcohol consumption.

Light alcohol consumption is positively correlated with blood levels of HDL (“good cholesterol”)

Define “light alcohol consumption” as 1-2 alcoholic beverages per day

Non-drinkers: self-reported drinking of one drink a week or less

Control for age, gender, and an indicator for educational attainment

HDL and alcohol consumption

Call:
lm(formula = Y[, "HDL"] ~ drinking + X)

Residuals:
    Min      1Q  Median      3Q     Max
-5.0855 -0.6127 -0.0512  0.6389  4.2383

Coefficients:
            Estimate Std. Error t value Pr(>|t|)
(Intercept) 0.225550   0.091105   2.476 0.013412 *
drinking    0.597399   0.091917   6.499 1.11e-10 ***
Xage        0.006409   0.001452   4.415 1.09e-05 ***
Xgender     0.689557   0.049426  13.951  < 2e-16 ***
Xeduc       0.194338   0.051161   3.799 0.000152 ***
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 0.9216 on 1434 degrees of freedom
Multiple R-squared:  0.1531, Adjusted R-squared:  0.1507
F-statistic: 64.81 on 4 and 1434 DF,  p-value: < 2.2e-16

Blood mercury and alcohol consumption

Call:
lm(formula = Y[, "Methylmercury"] ~ drinking + X)

Residuals:
    Min      1Q  Median      3Q     Max
-2.3570 -0.7363 -0.0728  0.6242  4.1127

Coefficients:
             Estimate Std. Error t value Pr(>|t|)
(Intercept)  0.442044   0.096385   4.586 4.91e-06 ***
drinking     0.364096   0.097244   3.744 0.000188 ***
Xage         0.008186   0.001536   5.330 1.14e-07 ***
Xgender     -0.062664   0.052290  -1.198 0.230966
Xeduc        0.269815   0.054126   4.985 6.95e-07 ***
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 0.975 on 1434 degrees of freedom
Multiple R-squared:  0.05209, Adjusted R-squared:  0.04945
F-statistic: 19.7 on 4 and 1434 DF,  p-value: 8.41e-16

Residual Correlation

Pearson's product-moment correlation

data:  hdl_fit$residuals and mercury_fit$residuals
t = 3.7569, df = 1437, p-value = 0.0001789
alternative hypothesis: true correlation is not equal to 0
95 percent confidence interval:
 0.04718758 0.14953581
sample estimates:
      cor
0.0986225

Residual correlation might be indicative of confounding bias
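The `cor.test` statistic can be checked by hand: for a sample correlation r with df = n - 2, t = r·√df / √(1 - r²). The sketch below checks that arithmetic with the reported values and then, in a hypothetical simulation (all variables illustrative, not NHANES data), shows how an omitted confounder `u` that affects the treatment and two outcomes leaves their residuals correlated after regressing each outcome on the treatment and an observed covariate.

```python
import numpy as np

def corr_t_stat(r, df):
    """t statistic for a Pearson correlation r with df = n - 2."""
    return r * np.sqrt(df) / np.sqrt(1.0 - r**2)

# Values reported by cor.test on the HDL and mercury residuals.
print(round(corr_t_stat(0.0986225, 1437), 4))   # reproduces t = 3.7569

def residuals(y, design):
    """Residuals from an OLS fit of y on the columns of design."""
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return y - design @ coef

# Hypothetical simulation: u affects the treatment and both outcomes but is omitted.
rng = np.random.default_rng(1)
n = 50_000
x = rng.normal(size=n)                  # observed covariate
u = rng.normal(size=n)                  # unobserved confounder
t = (0.5 * x + 0.8 * u + rng.normal(size=n) > 0).astype(float)
y1 = 0.6 * t + 0.3 * x + 0.5 * u + rng.normal(size=n)
y2 = 0.2 * x + 0.5 * u + rng.normal(size=n)   # y2 has no treatment effect
design = np.column_stack([np.ones(n), t, x])
r = np.corrcoef(residuals(y1, design), residuals(y2, design))[0, 1]
print(f"residual correlation: {r:.3f}")   # positive, induced by the shared u
```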

Sensitivity Analysis

NUC unlikely to hold exactly. What then?

Calibrate assumptions about confounding to explore the range of plausible causal effects

Robustness: quantify how “strong” confounding has to be to nullify causal effect estimates

- Well-established methods for single-outcome analyses

Multi-outcome Sensitivity Analysis

- If we measure multiple outcomes, is there prior knowledge that we can leverage to strengthen causal conclusions?

- What might residual correlation in multi-outcome models mean for potential for confounding?

How do results change when we assume a priori that certain outcomes cannot be affected by treatments?

- Null control outcomes (e.g. alcohol consumption should not increase mercury levels)

Standard Assumptions

Assumption (Latent Ignorability)

U and X block all backdoor paths between T and Y (Pearl 2009)

Assumption (Latent positivity)

f(T = t | U = u, X = x) > 0 for all u and x

Assumption (SUTVA)

There are no hidden versions of the treatment and there is no interference between units

Single-outcome Sensitivity Analysis

Result (Cinelli and Hazlett 2020)

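Assume the outcome is linear in the treatment and confounders (no interactions). Then the squared omitted variable bias for the PATE is

$$\text{Bias}^2 = \frac{R^2_{Y \sim U \mid T, X}\, R^2_{T \sim U \mid X}}{1 - R^2_{T \sim U \mid X}} \cdot \frac{\sigma^2_{y \mid t, x}}{\sigma^2_{t \mid x}}$$

where $R^2_{Y \sim U \mid T, X}$ and $R^2_{T \sim U \mid X}$ are the partial $R^2$ of $U$ with the outcome and with the treatment, and $\sigma^2_{y \mid t, x}$ and $\sigma^2_{t \mid x}$ are the residual variances of $Y$ given $(T, X)$ and of $T$ given $X$.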

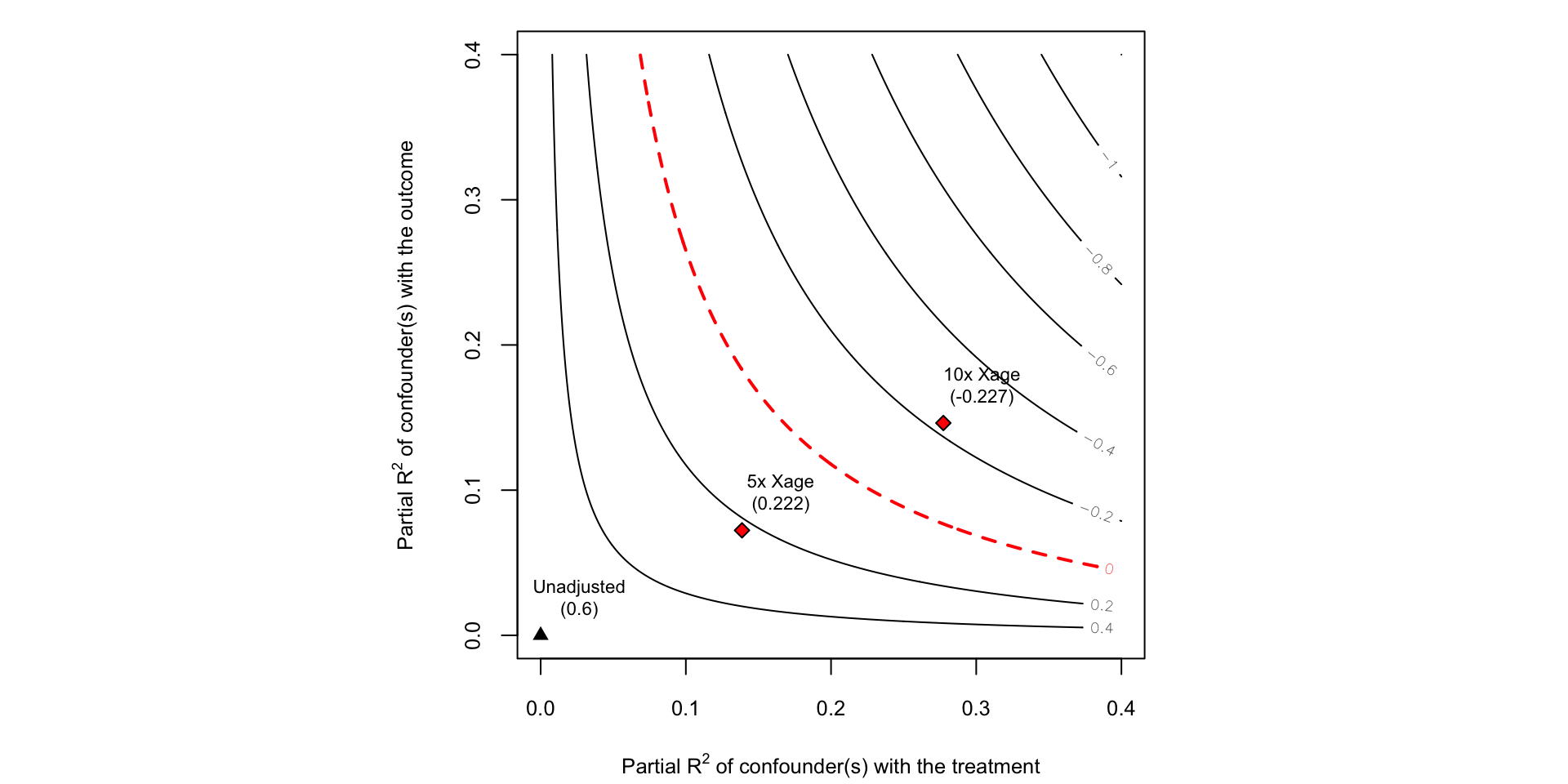
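The Cinelli and Hazlett (2020) bias can be evaluated directly from regression output, because the ratio of residual standard deviations can be written as se(τ̂)·√df for the treatment coefficient, giving |bias| = se·√(df · R²_{Y∼U|T,X} R²_{T∼U|X} / (1 - R²_{T∼U|X})). A minimal sketch, assuming the drinking coefficient from the HDL regression above and hypothetical partial R² values; `ovb_bias` is an illustrative helper, not the sensemakr API (the sensemakr R package provides a full implementation).

```python
import math

def ovb_bias(se, df, r2_yu, r2_tu):
    """Absolute omitted-variable bias (Cinelli and Hazlett 2020).

    se, df : standard error and residual degrees of freedom of the treatment
             coefficient in the regression that omits U.
    r2_yu  : partial R^2 of U with the outcome, given T and X.
    r2_tu  : partial R^2 of U with the treatment, given X (must be < 1).
    """
    return se * math.sqrt(df * r2_yu * r2_tu / (1.0 - r2_tu))

estimate, se, df = 0.597399, 0.091917, 1434   # drinking coefficient, HDL model
for r2 in (0.01, 0.05, 0.10):                 # hypothetical confounder strengths
    bias = ovb_bias(se, df, r2, r2)
    # Assumes confounding inflates the estimate, so the bias is subtracted.
    print(f"R2_yu = R2_tu = {r2:.2f}: |bias| = {bias:.3f}, "
          f"adjusted estimate = {estimate - bias:.3f}")
```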

Robustness

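- How big do the partial $R^2$ values of the confounder with the treatment and with the outcome need to be to explain away the estimated effect?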
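Cinelli and Hazlett (2020) summarize the answer with the robustness value RV: the common value of both partial R² that would reduce the estimate to zero. With f² = t²/df, RV = ½(√(f⁴ + 4f²) - f²). The sketch below assumes the drinking coefficient from the HDL regression above (estimate 0.597399, se 0.091917, df 1434) and checks that plugging RV into both partial R² of the bias formula recovers the point estimate; the helper names are illustrative.

```python
import math

def robustness_value(t_stat, df):
    """Robustness value: confounder strength that explains away the estimate."""
    f2 = t_stat**2 / df                      # squared partial Cohen's f of the treatment
    return 0.5 * (math.sqrt(f2**2 + 4.0 * f2) - f2)

def ovb_bias(se, df, r2_yu, r2_tu):
    """Cinelli-Hazlett absolute bias given the two partial R^2 values."""
    return se * math.sqrt(df * r2_yu * r2_tu / (1.0 - r2_tu))

estimate, se, df = 0.597399, 0.091917, 1434
rv = robustness_value(estimate / se, df)
print(f"RV = {rv:.3f}")
# With both partial R^2 equal to RV, the bias equals the estimate itself.
print(f"bias at RV = {ovb_bias(se, df, rv, rv):.4f} vs estimate {estimate}")
```

Because RV solves RV²/(1 - RV) = f², the bias at RV is se·√df·f = se·|t|, which is exactly the estimate.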

Calibrating Sensitivity Parameters

What values of the sensitivity parameters are plausible?

Can use observed covariates to generate benchmark values:

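Compute $R^2_{T \sim X_j \mid X_{-j}}$, the partial $R^2$ of observed covariate $X_j$ with the treatment

Compute $R^2_{Y \sim X_j \mid T, X_{-j}}$, the partial $R^2$ of $X_j$ with the outcome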

Use domain knowledge to reason about most important confounders
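Benchmark partial R² values can be computed by comparing residual sums of squares with and without a covariate; for a single coefficient this equals t²/(t² + df), so it can also be read off reported regression output. A minimal numpy sketch on hypothetical simulated data (`age`, `gender`, and the coefficients are illustrative, not the NHANES columns):

```python
import numpy as np

def rss(y, design):
    """Residual sum of squares from an OLS fit."""
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    return resid @ resid

def partial_r2(y, target, others):
    """Partial R^2 of `target` with y, given an intercept and the columns in `others`."""
    reduced = np.column_stack([np.ones(len(y))] + others)
    full = np.column_stack([reduced, target])
    return 1.0 - rss(y, full) / rss(y, reduced)

rng = np.random.default_rng(2)
n = 1_439
age = rng.normal(size=n)
gender = rng.binomial(1, 0.5, size=n).astype(float)
t = (0.4 * gender + 0.2 * age + rng.normal(size=n) > 0).astype(float)
y = 0.5 * t + 0.7 * gender + 0.1 * age + rng.normal(size=n)

# Benchmarks for "gender": strength with the treatment and with the outcome.
r2_t_gender = partial_r2(t, gender, [age])
r2_y_gender = partial_r2(y, gender, [t, age])
print(f"R2(T ~ gender | age)    = {r2_t_gender:.3f}")
print(f"R2(Y ~ gender | T, age) = {r2_y_gender:.3f}")

# Same quantity from a reported t statistic: Xgender in the HDL regression.
t_gender, df = 13.951, 1434
print(f"from reported t: {t_gender**2 / (t_gender**2 + df):.3f}")
```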

Sensitivity of HDL Cholesterol Effect

From the sensemakr documentation (Cinelli, Ferwerda, and Hazlett 2020)

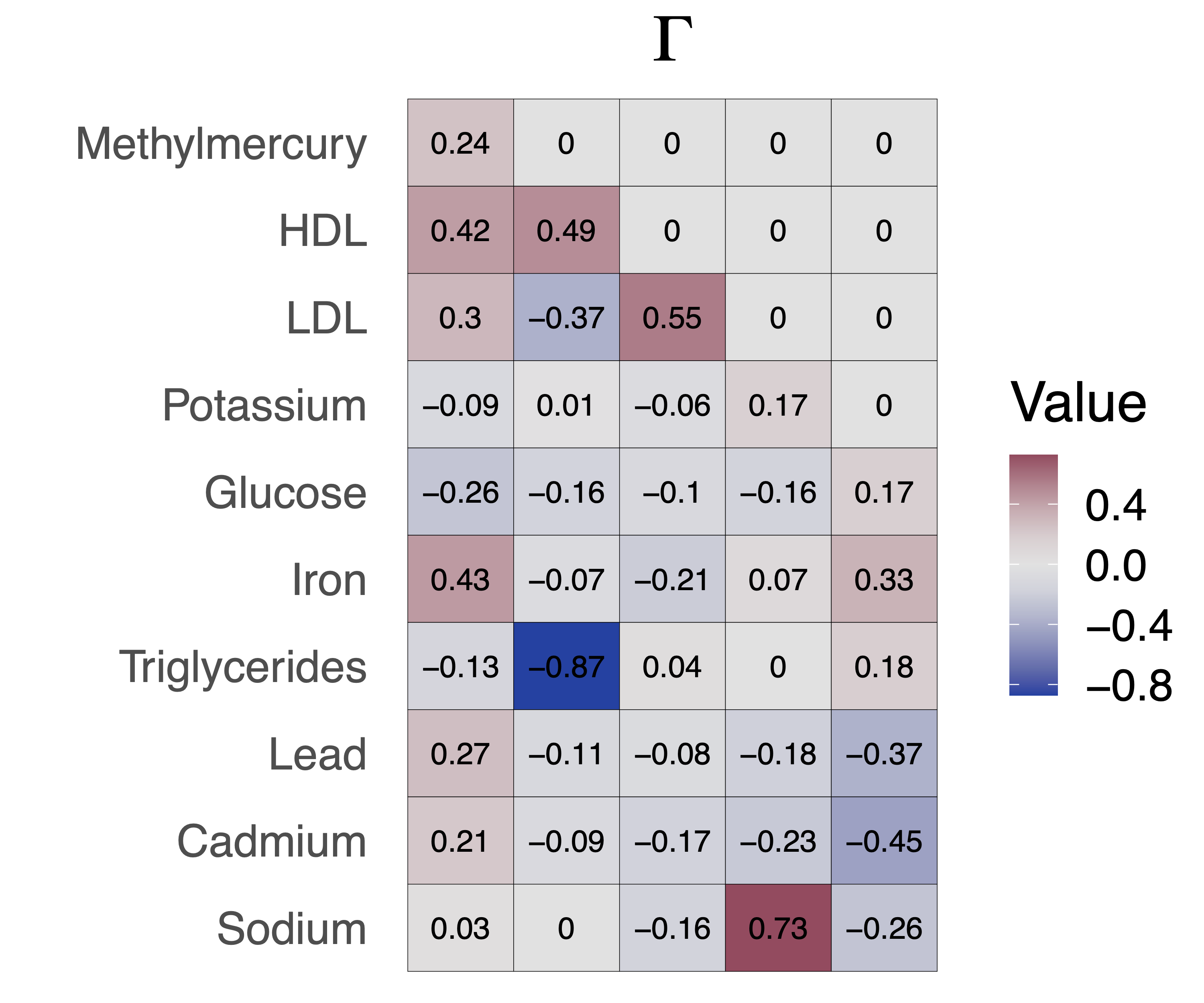

Models with factor-structured residuals

Assume the observed data mean and covariance can be expressed as follows:
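To make "factor-structured residuals" concrete, here is a minimal sketch assuming a hypothetical rank-m model in which the outcome residuals are Γz + ε, with latent factors z ∼ N(0, I_m) and independent noise ε with diagonal covariance Λ, so that the residual covariance is ΓΓᵀ + Λ. The names `gamma` and `lam` are illustrative notation for this example, not necessarily the paper's.

```python
import numpy as np

rng = np.random.default_rng(3)
q, m, n = 10, 2, 200_000                       # outcomes, latent factors, sample size
gamma = rng.normal(scale=0.7, size=(q, m))     # hypothetical factor loadings (q x m)
lam = rng.uniform(0.3, 1.0, size=q)            # hypothetical idiosyncratic variances

z = rng.normal(size=(n, m))                    # latent factors
eps = rng.normal(size=(n, q)) * np.sqrt(lam)   # independent outcome-specific noise
resid = z @ gamma.T + eps                      # n x q factor-structured residuals

implied = gamma @ gamma.T + np.diag(lam)       # Gamma Gamma^T + Lambda
empirical = np.cov(resid, rowvar=False)
print(f"max |empirical - implied| = {np.abs(empirical - implied).max():.3f}")

# Off-diagonal covariance comes only from the m shared factors, so
# empirical - Lambda is approximately rank m.
eigvals = np.linalg.eigvalsh(empirical - np.diag(lam))[::-1]
print(np.round(eigvals, 3))   # m large eigenvalues, the rest near zero
```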

A Structural Equation Model

- This SEM is compatible with the factor-structured residuals

A Structural Equation Model

Confounding bias is

- User-specified sensitivity parameters

A Sensitivity Specification

- Interpretable specification for

Define

Multi-Outcome Assumptions

Assumption (Homoscedasticity)

Assumption (Factor confounding)

The factor loadings,

To identify factor loadings,

Bounding the Omitted Variable Bias

Theorem (Bounding the bias for outcome

Given the structural equation model, the sensitivity specification, and the assumptions above, the squared omitted variable bias for the PATE of outcome

The bound on the bias for outcome

A single sensitivity parameter,

Reparametrizing

Fix a

- Related to the marginal sensitivity model (Tan 2006)

Null Control Outcomes

- Assume we have null control outcomes,

- Need at least

Null Control Outcomes

Theorem (Bias with Null Control Outcomes)

Assume the previous structural equation model and sensitivity specification. Then the squared omitted variable bias for the PATE of outcome

- If

- Ignorance about the bias is smallest when

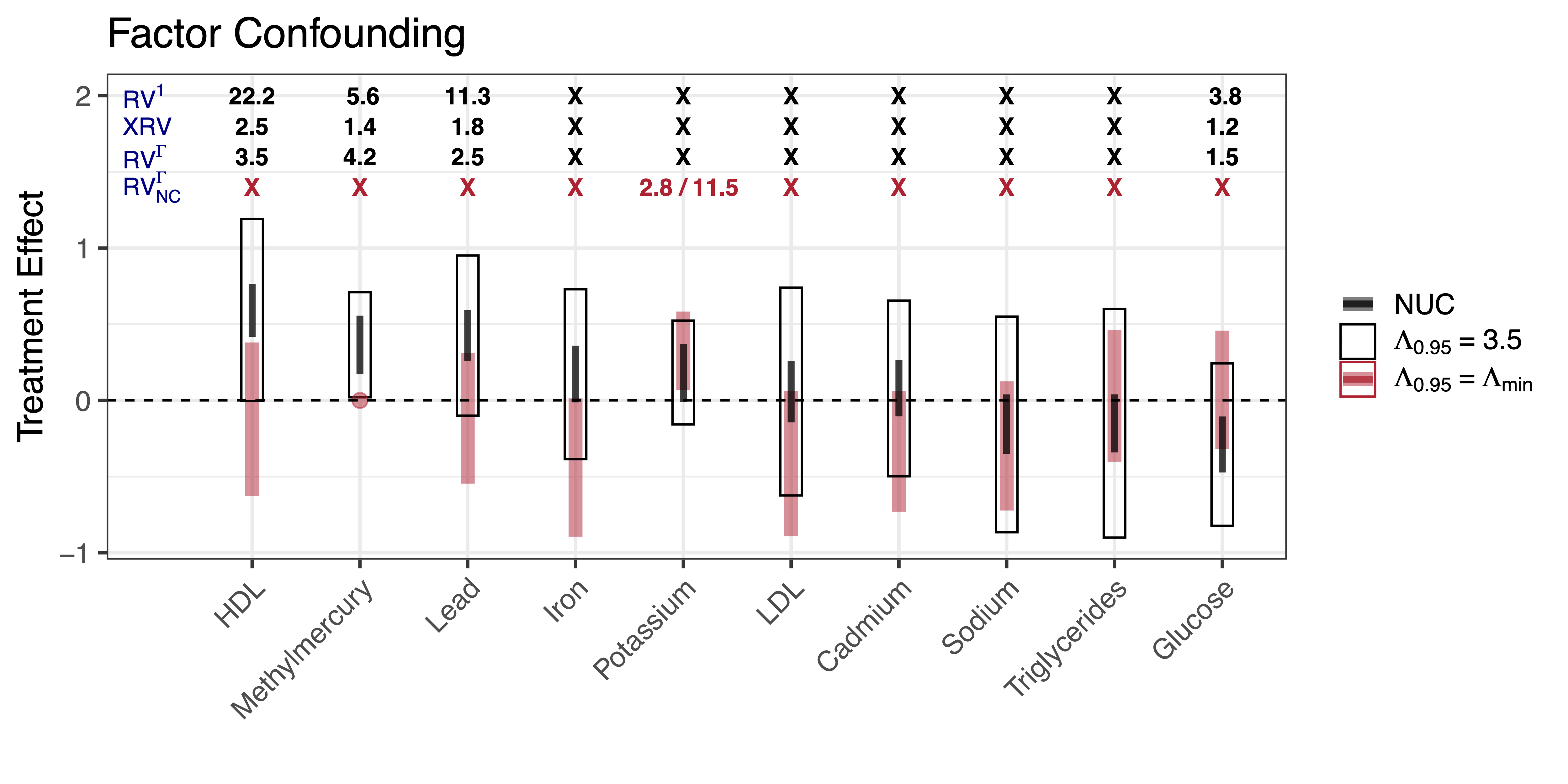

Robustness under Factor Confounding

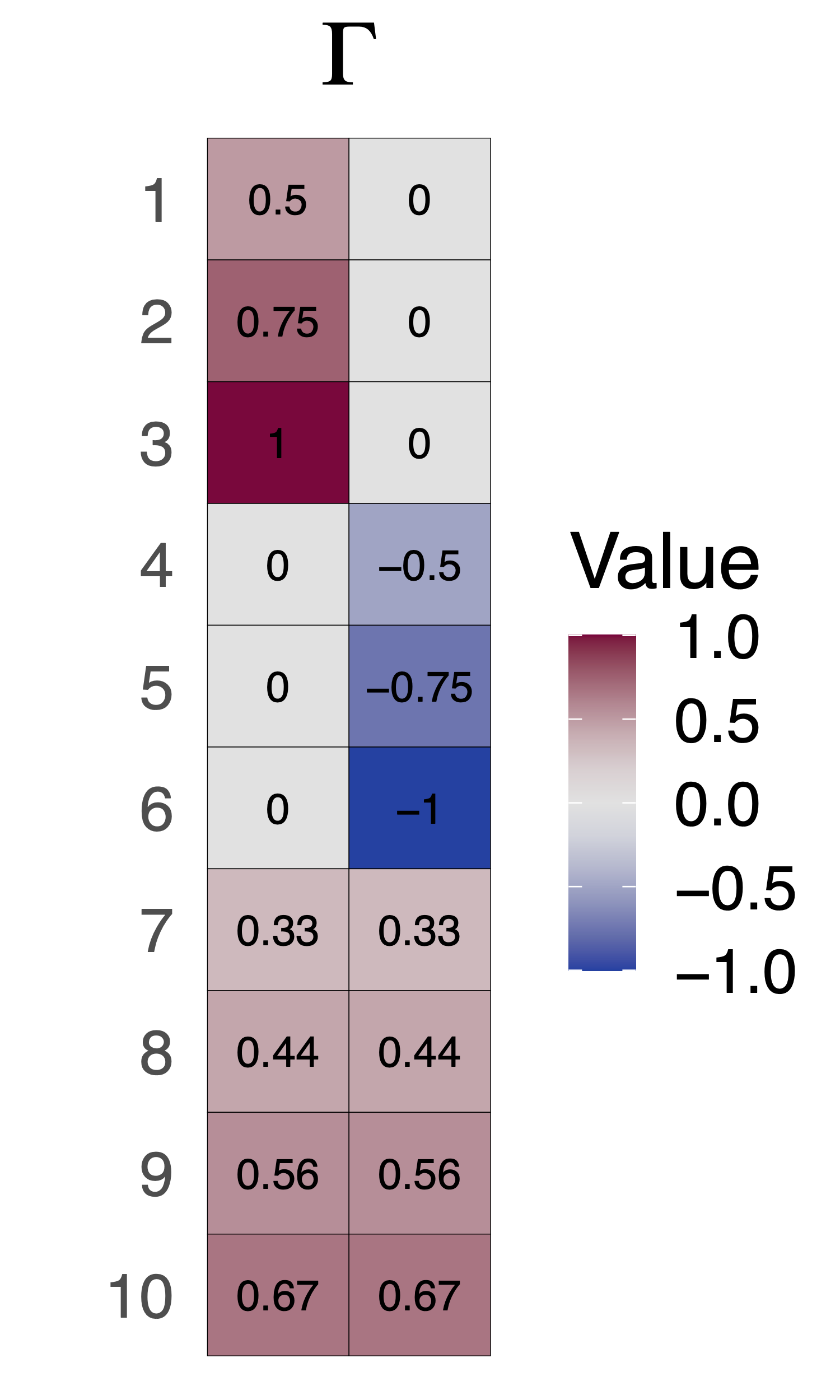

Simulation Study

Gaussian data generating process

Simulation Study

Fit a Bayesian linear regression on the 10 outcomes given the treatment

Assume a residual covariance with a rank-two factor structure

Plot ignorance regions assuming

Plot ignorance regions assuming
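A rough sketch of this kind of simulation, swapping in ordinary least squares and a principal-components estimate of the loading space for the Bayesian fits described above (a simplification, not the talk's procedure). It assumes a hypothetical data-generating process with 10 Gaussian outcomes, a rank-two factor structure, isotropic noise (so PCA targets the loading space), and confounders that are the factors themselves. In this setup the naive bias vector across outcomes lies in the span of the loadings, which is the kind of structure factor confounding exploits.

```python
import numpy as np

rng = np.random.default_rng(4)
n, q, m = 100_000, 10, 2
tau = np.zeros(q)
tau[0] = 0.5                                    # hypothetical: only outcome 0 responds
gamma = rng.normal(scale=0.8, size=(q, m))      # hypothetical loadings (q x m)

u = rng.normal(size=(n, m))                     # unobserved confounders = latent factors
t = u @ np.array([0.6, -0.4]) + rng.normal(size=n)               # treatment driven by u
y = np.outer(t, tau) + u @ gamma.T + rng.normal(size=(n, q))     # isotropic noise

# OLS of each outcome on the treatment (stand-in for the Bayesian regression).
design = np.column_stack([np.ones(n), t])
coef, *_ = np.linalg.lstsq(design, y, rcond=None)   # shape (2, q)
tau_hat = coef[1]
resid = y - design @ coef

# Rank-two estimate of the loading space from the residual covariance (PCA).
eigvals, eigvecs = np.linalg.eigh(np.cov(resid, rowvar=False))
basis = eigvecs[:, -m:]                          # top-m eigenvectors

bias = tau_hat - tau
in_span = basis @ (basis.T @ bias)               # projection onto estimated loading space
print("naive bias:", np.round(bias, 3))
print("share outside span:", round(np.linalg.norm(bias - in_span) / np.linalg.norm(bias), 3))
```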

Simulation Study

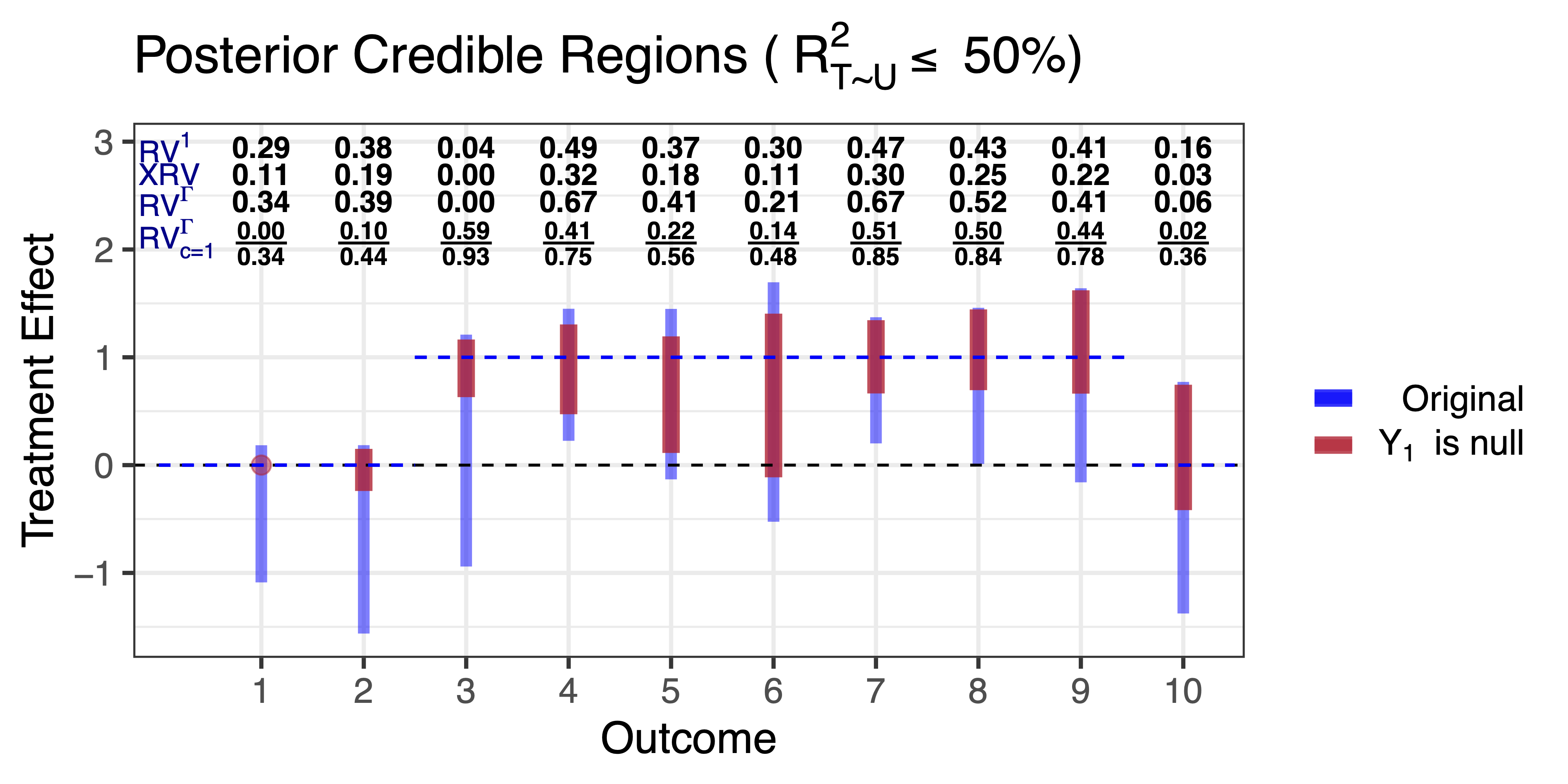

The effects of light drinking

- Measure ten different outcomes from blood samples:

  - natural: HDL, LDL, triglycerides, potassium, iron, sodium, glucose
  - environmental toxicants: mercury, lead, cadmium

- Measured confounders: age, gender, and an indicator for highest educational attainment

- Residual correlation in the outcomes might be indicative of additional confounding bias

The effects of light drinking

Model:

Residuals are approximately Gaussian

Fit a multivariate Bayesian linear regression with factor-structured residuals on all outcomes

- Need to choose rank of

- Consider posterior distribution of

Benchmark Values

Use age, gender and an indicator of educational attainment to benchmark

For gender and education indicators the odds change was between

Assume light drinking has no effect on methylmercury levels

Results: NHANES alcohol study

Takeaways

Prior knowledge unique to the multi-outcome setting can help inform assumptions about confounding

Sharper sensitivity analysis, when assumptions hold

Negative control assumptions can potentially provide strong evidence for or against robustness

Future directions

- Identification with multiple treatments and multiple outcomes

- Collaboration on effects of pollutants on multiple health outcomes

- Sensitivity analysis for more general models / forms of dependence.

References

Thanks!

Jiaxi Wu (top, UCSB)

Jiajing Zheng (middle, formerly UCSB)

Alex D’Amour (bottom, Google Research)

Sensitivity to Unobserved Confounding in Studies with Factor-structured Outcomes https://arxiv.org/abs/2208.06552